Machine Learning Hackathon: AvaJams

Last weekend was the first Recurrent Neural Hacks! hackathon. Our team, AvaJams, built a product that creates a video mashup of your video with a matching music video.

Goal

The goal of the team was to create a fun music-oriented app that uses machine learning. Our final app generates mashups of a user’s uploaded video with a similar music video.

Execution

Since there is very little time to waste in a 24h hackathon, we set out to build a pipeline that could produce fun results as quickly as possible, but could still be extended if there was time left.

The first challenge was to create a library of music videos to use in mashups. Initially, we had the ambitious goal of using a library of 36k music videos. Getting our hands on such a large number of videos took more time than anticipated, so we ended up using far fewer videos than that.

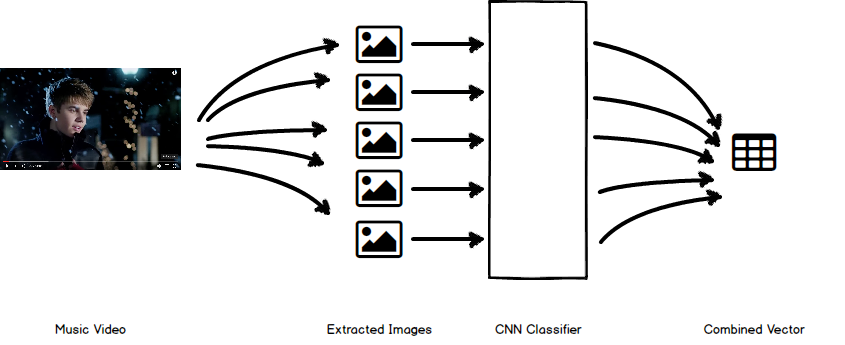

The second challenge was to match videos based on their similarity, which is what I spent much of my time on. Given the limited amount of time and sample data, we could not reasonably expect to train a neural net on raw data. Therefore, we came up with the following idea to match the videos:

- Extract one jpeg per second from the video

- Run an off-the-shelf pretrained CNN on the jpegs to extract classes

- Combine that output to create one vector per video

- Match videos by finding the nearest neighbor using that vector
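The last two steps can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the code we wrote at the hackathon: it assumes the per-frame CNN outputs are averaged into the per-video vector and that "nearest" means smallest Euclidean distance, neither of which is pinned down above. The names `video_vector` and `nearest_video`, and the toy 3-class scores, are made up for this example.

```python
import math


def video_vector(frame_scores):
    """Combine per-frame score vectors into one vector per video.

    Assumption: the frames are combined with a plain element-wise mean.
    """
    if not frame_scores:
        raise ValueError("need at least one frame")
    count = len(frame_scores)
    # zip(*rows) walks the score vectors column by column (class by class).
    return [sum(column) / count for column in zip(*frame_scores)]


def nearest_video(query, library):
    """Return the name of the library video whose vector is closest to query.

    Assumption: closeness is Euclidean distance (math.dist, Python 3.8+).
    """
    return min(library, key=lambda name: math.dist(query, library[name]))


# Toy example with 3 made-up classes instead of the CNN's full output.
library = {
    "music_video_a": [0.9, 0.1, 0.0],
    "music_video_b": [0.0, 0.2, 0.8],
}
user_frames = [[0.8, 0.2, 0.0], [0.6, 0.2, 0.2]]  # one row per extracted jpeg
match = nearest_video(video_vector(user_frames), library)
```

With a real library, the vectors for all music videos would be computed once up front, so that only the user's video has to be processed at upload time.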

For the second step, we used Google’s Inception v3 trained on ImageNet (ILSVRC-2012, 1,000 classes). The big drawback here was that videos would only be matched on classes found in ILSVRC-2012, which includes common objects such as “desk”, “lemon” and “soup bowl”. I’m not entirely sure how many music videos prominently feature soup bowls. Therefore, when running the Inception model, we extracted both these classes and the output of the preceding fully connected layer. If there was enough time left at the end, we could add a final layer that would go beyond object classification and match on more suitable characteristics … There was not enough time left at the end.

Finally, we combined the user’s video and the matched music video into a mashup. Thanks to MoviePy, that was a breeze. The mashup would play the music video’s track continuously, while the imagery would switch between the user video and the music video every 2 seconds. The result was surprisingly cool.
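To make the switching logic concrete, here is a minimal sketch of the cut schedule alone, with no MoviePy calls. It assumes the mashup opens on the user's video and simply alternates until the end; the function name `mashup_schedule` and its parameters are invented for this illustration, and it does not address what to do when the user's video is shorter than the song.

```python
def mashup_schedule(duration, switch_every=2.0, sources=("user", "music")):
    """Return (source, start, end) tuples that cover [0, duration).

    Assumption: the imagery starts with the first entry in `sources`
    and rotates through them every `switch_every` seconds.
    """
    if duration <= 0 or switch_every <= 0:
        raise ValueError("duration and switch_every must be positive")
    segments = []
    index = 0
    while index * switch_every < duration:
        # Multiply instead of accumulating to avoid floating-point drift.
        start = index * switch_every
        end = min(start + switch_every, duration)
        segments.append((sources[index % len(sources)], start, end))
        index += 1
    return segments


schedule = mashup_schedule(5.0)
```

Each tuple says which video to show for which time range; a video library can then cut those ranges from the two clips, concatenate them, and lay the music video's audio track underneath.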

The final piece of the puzzle was a nice user-facing frontend. This was built using Flask and a Bootstrap template.

Result

The final result was a working website that mashes up a user-uploaded video with a matching music video. As a test, I shot a short video walking around the hackathon at 1 AM. I have no idea why, but this video got matched with a Justin Bieber Christmas song. You can’t make that stuff up. Clearly, this result went into the final pitch. We came in 2nd place out of 12 teams.